Analyzing User Emotions via Physiological Signals

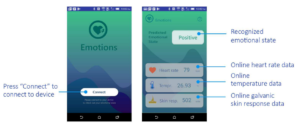

In this work, we use heart rate, body temperature, and galvanic skin response sensors to design a wearable sensing system for effective recognition of users' emotional states. The proposed system recognizes three types of emotions (positive, neutral, and negative) online.

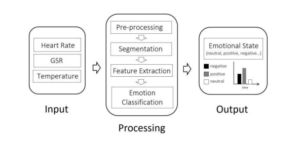

We apply machine learning to process the physiological signals collected from the user.

The process consists of four main phases: data pre-processing, data segmentation, feature extraction, and emotion classification. We implemented the system prototype on an Arduino platform paired with an Android smartphone. Extensive experiments in real-life scenarios show that the proposed system achieves up to 97% recognition accuracy when it uses a k-nearest neighbor classifier. In future work, we will consider more types of emotional states and look for correlations between these emotions and physical activities to enable more diverse and novel applications. The demo video, source code, and dataset of our system are available at https://goo.gl/KhhcyA.
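The four phases can be illustrated with a short Python sketch. This is not the project's published code. Only the three sensor channels and the k-nearest neighbor classifier come from the description. The following choices are assumptions made for clarity:

* moving-average smoothing
* fixed-size windows
* mean/std/min/max features
* z-score scaling
* every function name (`moving_average`, `segment`, `features`, `window_vector`, `fit_scaler`, `scale`, `knn_predict`, `classify_signals`)

```python
import math
import statistics
from collections import Counter


def moving_average(signal, k=5):
    """Pre-processing (assumed): smooth a raw signal with a trailing moving average of up to k samples."""
    out = []
    for i in range(len(signal)):
        window = signal[max(0, i - k + 1): i + 1]
        out.append(sum(window) / len(window))
    return out


def segment(signal, size, step):
    """Segmentation (assumed): cut a signal into fixed-size windows that start every `step` samples."""
    return [signal[i:i + size] for i in range(0, len(signal) - size + 1, step)]


def features(window):
    """Feature extraction (assumed): mean, population std, min and max of one window."""
    return [statistics.mean(window), statistics.pstdev(window), min(window), max(window)]


def window_vector(hr, gsr, temp):
    """Concatenate the features of aligned heart-rate, GSR and body-temperature windows."""
    return features(hr) + features(gsr) + features(temp)


def fit_scaler(vectors):
    """Compute per-feature mean and std for z-score scaling (a std of 0 is replaced by 1)."""
    columns = list(zip(*vectors))
    means = [statistics.mean(c) for c in columns]
    stds = [statistics.pstdev(c) or 1.0 for c in columns]
    return means, stds


def scale(vector, scaler):
    """Apply z-score scaling so no single sensor dominates the Euclidean distance."""
    means, stds = scaler
    return [(v - m) / s for v, m, s in zip(vector, means, stds)]


def knn_predict(train, query, k=3):
    """Emotion classification: majority vote among the k training vectors closest to `query`."""
    nearest = sorted(train, key=lambda item: math.dist(item[0], query))[:k]
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]


def classify_signals(train, scaler, hr, gsr, temp, size, step, k=3):
    """Run all four phases on aligned raw signals and return one label per window."""
    hr, gsr, temp = [moving_average(s) for s in (hr, gsr, temp)]
    windows = zip(segment(hr, size, step), segment(gsr, size, step), segment(temp, size, step))
    return [knn_predict(train, scale(window_vector(*w), scaler), k) for w in windows]


if __name__ == "__main__":
    # Synthetic, made-up templates for illustration only; NOT data from the study.
    pos = window_vector([80] * 4, [5] * 4, [36] * 4)
    neg = window_vector([60] * 4, [1] * 4, [35] * 4)
    scaler = fit_scaler([pos, neg])
    train = [(scale(pos, scaler), "positive"), (scale(neg, scaler), "negative")]
    print(classify_signals(train, scaler, [80] * 8, [5] * 8, [36] * 8, size=4, step=4, k=1))
```

kNN compares raw Euclidean distances, so the sketch z-score scales each feature. Without scaling, heart rate in beats per minute would outweigh skin conductance and temperature values simply because of its units. In practice the window size, step, and `k` would have to be tuned on real recordings such as the dataset linked above.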

Role: Technical Writer

Description

Recent studies have shown that the major factors influencing our health include not only physical activity but also the emotional states we experience in daily life. These states continuously shape our behavior and significantly affect our physical health. As a result, emotion recognition has drawn increasing attention from researchers in recent years. In this work, we propose a system that uses off-the-shelf wearable sensors (heart rate, galvanic skin response, and body temperature) to read physiological signals from the user. It then applies machine learning techniques to recognize the user's emotional states. Recognizing these states is a key step toward improving not only physical health but also emotional intelligence in advanced human-machine interaction. We consider three types of emotional states and conduct experiments in real-life scenarios, achieving a highest recognition accuracy of 97.31%.